
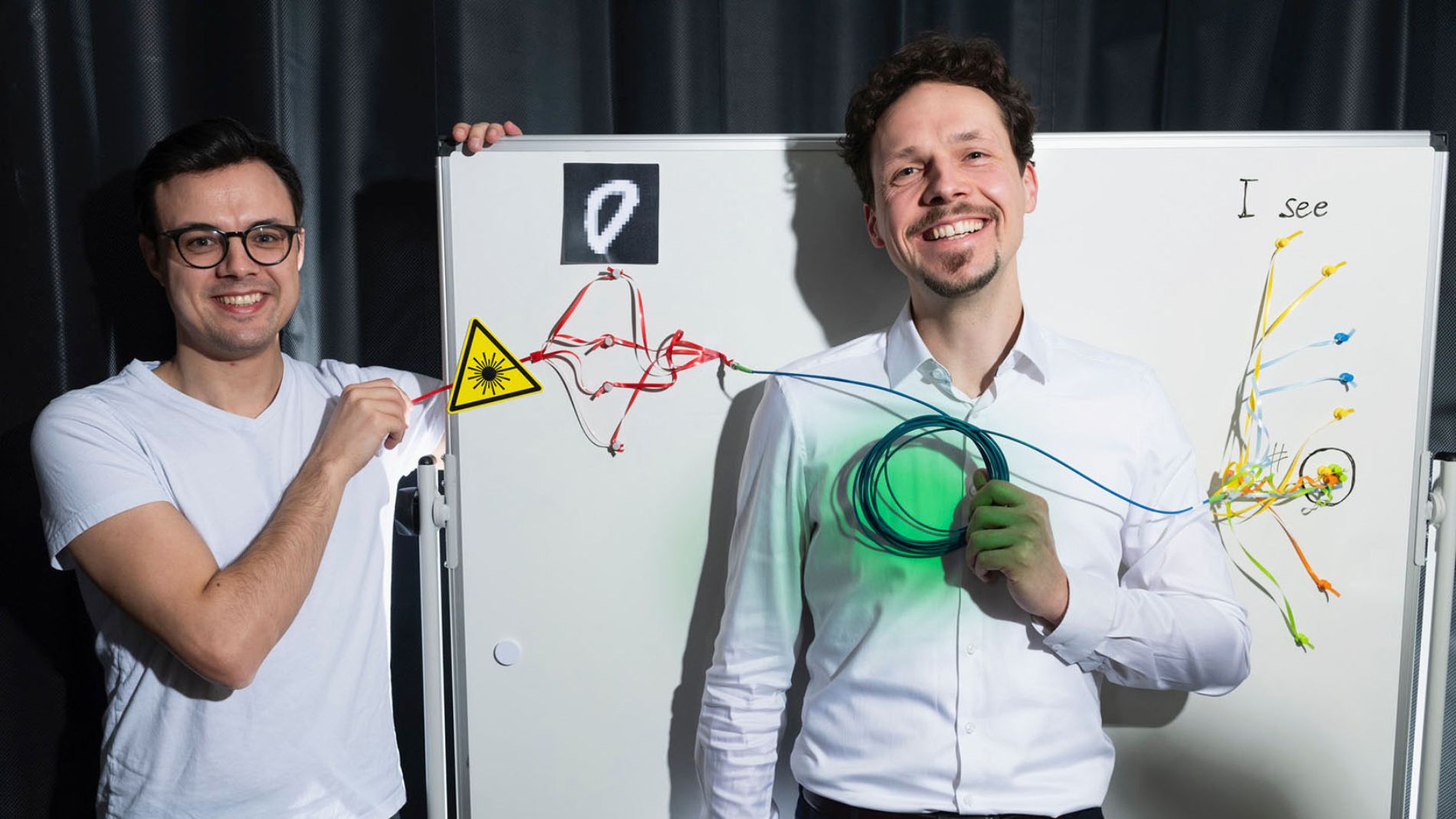

Dr Mario Chemnitz is Head of the Smart Photonics Department at the Leibniz Institute of Photonic Technology and Junior Professor of Intelligent Photonic Systems at Friedrich Schiller University in Jena. His team has developed a new technology that could reduce the high energy requirements of AI systems and speed up data processing, taking the neural networks of the human brain as its inspiration.

Anna Moldenhauer: Together with Dr Bennet Fischer from the Leibniz Institute of Photonic Technology (Leibniz IPHT) and an international team, you set out to develop energy-efficient computing systems – how did the research come about?

Dr Chemnitz: It started with the potential of neural networks. We live in a time in which artificial intelligence is becoming increasingly important and increasingly far-reaching; it is transforming our entire society. The field of research itself is several decades old, but the major breakthroughs have come in the last ten to twenty years. The computational concept of ‘deep learning’ was particularly decisive. It means that we can now mathematically model deep neural networks and have found training methods that enable them to solve complex problems. We can now start to use these deep networks for tasks that we would describe as intelligent: tasks where you have to think outside the box. One example is analysing large numbers of data points in biology, such as tens of thousands of cells in a small area of a blood sample. To determine which cells are normal and which are not, a highly trained specialist would have to spend a long time analysing the cluster of cells in a microscope image, which is not easy, depending on the image. Neural networks can be trained on such small image formats to the point where they perform this task quickly and with very high accuracy.

This is just one example of an application; another would be language models, which need to recognise complex patterns in order to infer the next word in a sequence. The areas of application are therefore very diverse. The problem with neural networks is their enormous energy consumption. We are not far from building a nuclear power station for a single supercomputer that supports just one large language model. Microsoft, for example, recently concluded a 20-year power purchase agreement with the utility Constellation Energy to restart a reactor at the decommissioned Three Mile Island nuclear plant in the US and supply its data centres with the electricity. The International Energy Agency also predicts that the electricity consumption of data centres, AI and cryptocurrencies could reach more than 1,000 terawatt hours by 2026, roughly equivalent to Japan's entire electricity consumption.

This is so critical that even the major chip manufacturers are now suggesting that digital computer hardware will not be able to deliver this performance in the long term. Ultimately, artificial intelligence will offer solutions to many problems, including climate protection, but the path to get there is highly damaging. This dilemma motivates researchers like us to find new approaches. It is a highly interdisciplinary field that brings together experts from physics, engineering and computer science. We are trying to create energy-efficient AI models and AI hardware and to expand the concept of neural computing. Our first publication is a small step in that direction.

"In order to significantly reduce the high energy requirements of AI systems in the future, the process utilises light for neural computing and is based on the neural networks of the human brain. The processors should be able to recognise and process the information contained in the light’ – so much for the press release. Can you break down a little how the processes work?

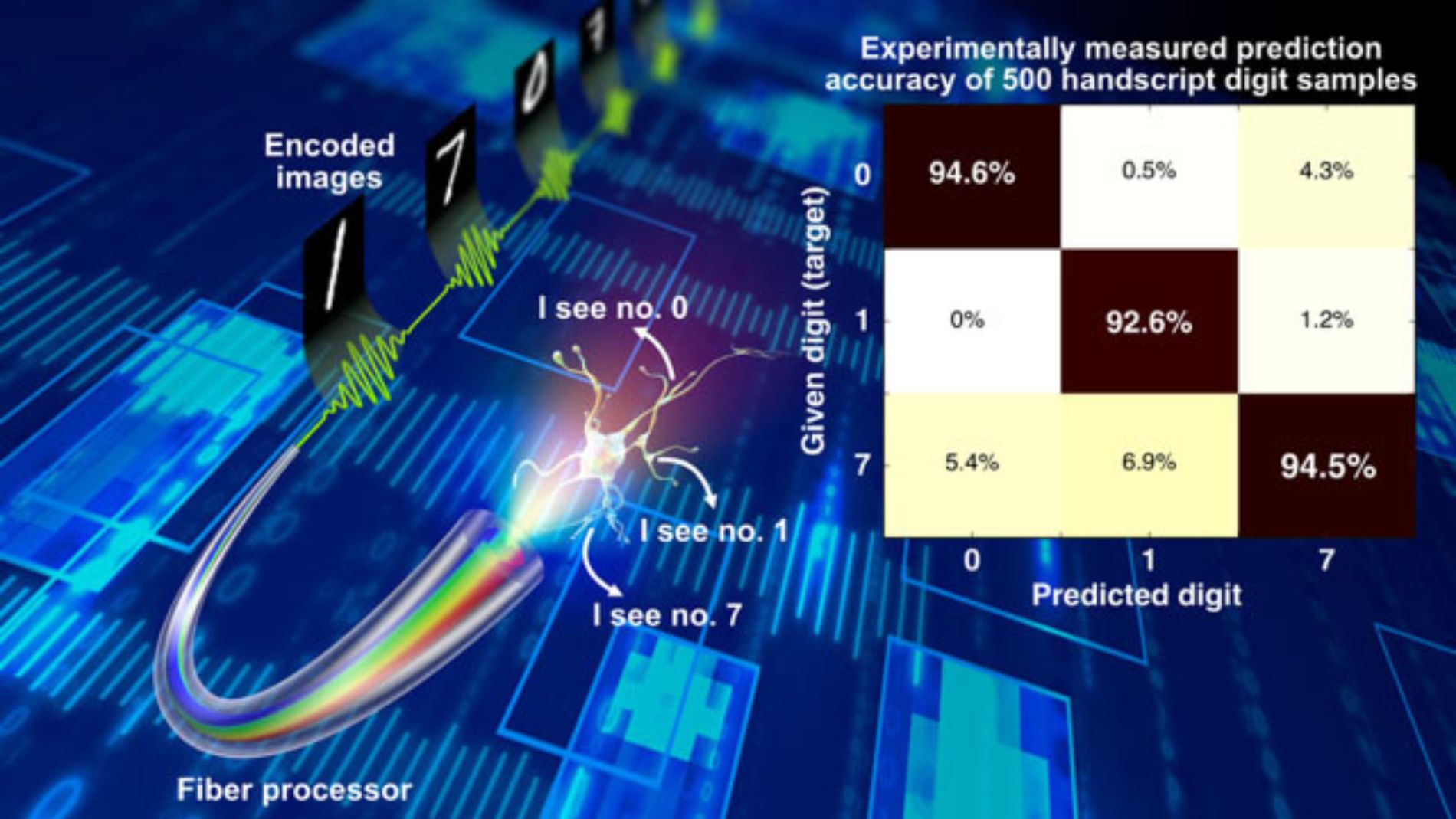

Dr Chemnitz: When it comes to computing with light, we are talking about two different fields of application. The first is to replace the digital computing architecture that we currently have in a computer. We are working towards high-end processors that can easily handle general artificial intelligence. Instead of using graphics cards for the vector-matrix multiplications that are crucial in a neural network, we are trying to develop photonic, or optical, processors that perform exactly these vector-matrix multiplications. The beauty of light in this context is that this happens with almost no energy input, because vector-matrix multiplication can be represented by natural physical processes. This sounds very complex at first, but we are actually talking about simple diffraction of light by structures that carry the data. Energy is then consumed only by the light source and the detection.
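To make the idea of a vector-matrix multiplication carried out by light more concrete, here is a minimal sketch, assuming an idealised, noise-free and purely linear optical element. Light passing through a fixed diffracting structure can be modelled as a transmission matrix `T` acting on the input field `x`: every output point receives a weighted superposition of all input points, which is exactly the product `y = T x`. The function names and the choice of a discrete Fourier transform as the example matrix (far-field diffraction of an aperture is, up to scaling, its Fourier transform) are illustrative assumptions, not a description of the Leibniz IPHT setup.

```python
import cmath
from typing import List, Sequence

Field = List[complex]
Matrix = Sequence[Sequence[complex]]


def propagate(transmission: Matrix, field_in: Sequence[complex]) -> Field:
    """Hypothetical model of a passive linear optical element.

    Each output mode is a weighted sum (interference) of all input modes,
    i.e. the vector-matrix product y = T @ x. In this idealised model the
    element itself consumes no energy; only source and detectors would.
    """
    return [sum(t * e for t, e in zip(row, field_in)) for row in transmission]


def detect(field_out: Sequence[complex]) -> List[float]:
    """Photodetectors register intensity |E|^2, not the complex amplitude."""
    return [abs(e) ** 2 for e in field_out]


def dft_matrix(n: int) -> List[List[complex]]:
    """Unitary DFT matrix: an idealised stand-in for far-field diffraction."""
    return [
        [cmath.exp(-2j * cmath.pi * j * k / n) / n ** 0.5 for k in range(n)]
        for j in range(n)
    ]


if __name__ == "__main__":
    # With real-valued weights the optical output is an ordinary matrix product.
    print(propagate([[1, 2], [3, 4]], [1, 1]))  # [3, 7]
    # A single bright input point spreads its power evenly over all outputs.
    print(detect(propagate(dft_matrix(4), [1, 0, 0, 0])))
```

Because the example matrix is unitary, the total detected power equals the input power, which mirrors the point that the passive element adds no energy of its own.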

We also hope to make the computing processes more compact and integrate them into a single computing unit. In the second, best-case scenario, we could create freely programmable deep networks using a fully optical computing architecture. The data would then no longer be stored on a hard disc, but in the light field. Optical neural networks could accelerate AI applications in an energy-efficient manner. For this, we are thinking of edge processors: small, highly energy-efficient computing units that work directly in the domain where the information is already available.

In optics, for example, cameras and microscopes work on the basis of the information in the light field. Signal information that is already in the optical domain would then no longer need to be recorded and pushed through a supercomputer, which consumes a lot of energy. Instead, how about equipping camera systems with intelligent optics that do not reconstruct the image but tell us directly where a complex building or a defect in a structural matrix can be found? Being able to recognise such weak points with optical AI could be important in architecture, for example. There are also opportunities for analysing materials: scanning a metal beam with a light field would be enough to detect an inconsistency. Manual image processing would no longer be necessary either, as the intelligent optics would be trained to select and combine the appropriate filters independently.

When you speak of light in this context, what exactly do you mean?

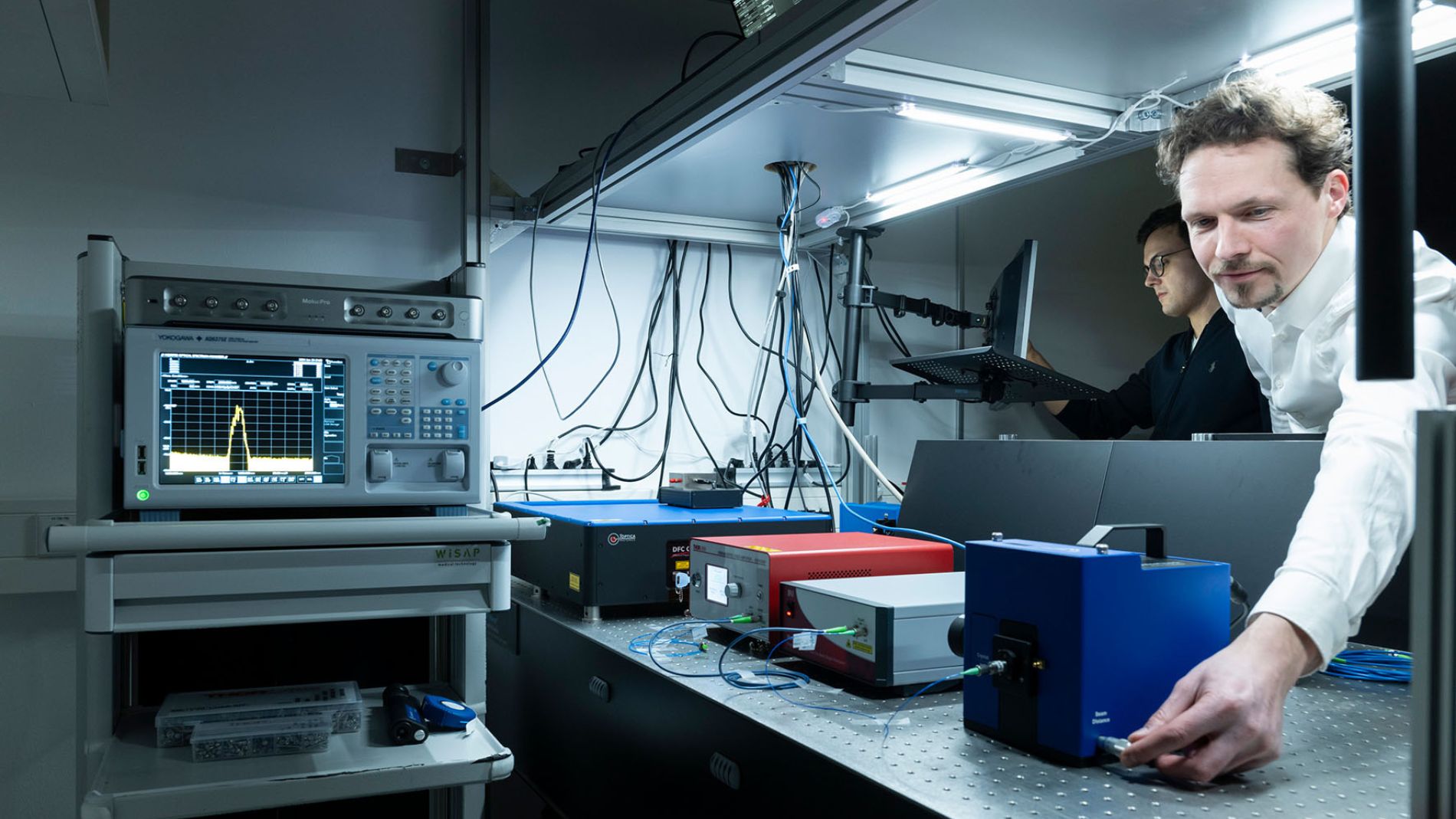

Dr Chemnitz: In our system we use lasers, which are well defined, so there are virtually no fluctuations and no noise. We know exactly what the light field looks like in time and space. At the same time, the system is still too expensive. Our aim is to use an ordinary white-light LED as the light source for a deep optical network. In this first test, which we are now presenting, our network consists of a single layer; it is a simple, entry-level setup with limited applications. Other groups are also working on bringing the processes down to the single-photon level. The aim is to produce the vector-matrix product, which can then ultimately filter the structural information out of the incoming light field. This is very impressive, as electronics could never achieve this performance so quickly and efficiently.

Data processing would become faster and the energy consumption of AI would decrease – can you give us some figures to help us understand the scale?

Dr Chemnitz: We are talking about a few femtojoules (fJ) of energy per arithmetic operation. A femtojoule is 10⁻¹⁵ joules, so its first non-zero digit sits in the fifteenth decimal place; you could also say a millionth of a billionth of a joule. So really very little. The light processes are intrinsically so energy-efficient because the entire calculation is carried out passively, without any further energy having to be invested in the computing element. With our method, we hope that the optical components will use 1,000 to 100,000 times less energy per computing operation than today's highly efficient NVIDIA graphics cards. At the moment we are still at the lower end, around a factor of a thousand, and our demonstration has not yet reached the limits of what is technically feasible. We are continuing to work on increasing efficiency, for example to be able to perform a learning task with a latency of one nanosecond. That would also make us around a thousand times faster than the digital graphics cards currently available.
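The orders of magnitude in this answer can be checked with a few lines of arithmetic. The sketch below converts femtojoules to joules, confirms that 1 fJ is a millionth of a billionth of a joule, and scales a baseline per-operation energy by the hoped-for reduction factors of 1,000 and 100,000. The value of 3 fJ per operation is a hypothetical stand-in for "a few femtojoules", and the baseline is normalised to 1 because the interview gives no per-operation figure for the graphics cards; no measured data is implied.

```python
import math

FEMTO = 1e-15  # 1 fJ = 10**-15 J


def fj_to_joules(energy_fj: float) -> float:
    """Convert femtojoules to joules."""
    return energy_fj * FEMTO


def total_energy_joules(operations: float, energy_per_op_fj: float) -> float:
    """Energy for a workload of `operations` at a fixed cost per operation."""
    return operations * fj_to_joules(energy_per_op_fj)


def reduced_energy_per_op(baseline_j: float, reduction_factor: float) -> float:
    """Per-operation energy after dividing a baseline by a reduction factor."""
    return baseline_j / reduction_factor


if __name__ == "__main__":
    # Written out in full, 1 fJ has its first non-zero digit in the 15th decimal place.
    print(f"{fj_to_joules(1):.15f}")  # 0.000000000000001
    # A millionth (1e-6) of a billionth (1e-9) of a joule is the same quantity.
    print(math.isclose(1e-6 * 1e-9, FEMTO))  # True
    # Hypothetical workload: 10**15 operations at an assumed 3 fJ each (about 3 J).
    print(total_energy_joules(1e15, 3))
    # Hoped-for range, relative to a baseline normalised to 1 per operation.
    for factor in (1_000, 100_000):
        print(factor, reduced_energy_per_op(1.0, factor))
```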

Would your technology be suitable for energy-efficient data centres that could dispense with extensive electronic infrastructure?

Dr Chemnitz: We haven't got that far yet. However, I can imagine that in 20 to 30 years' time we will have competitive fully optical systems. What we can already use today are hybrid solutions that accelerate AI hardware. Various start-ups are focusing on the production of so-called accelerator chips for AI. These companies place an optical chip on top of an electronic digital chip and offload to it the computing operations that are heavy and energy-intensive for the electronics. These include the Fourier transform, which many readers will be familiar with from image processing and data analysis. It is one of the basic operations for splitting an image into its frequency components, for example, and then separating high-frequency from low-frequency structures.
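The Fourier-filtering operation mentioned here can be illustrated in a few lines. The sketch below uses a naive discrete Fourier transform on a one-dimensional signal to split it into frequency components, zeroes those above a chosen cutoff and transforms back, which separates low-frequency from high-frequency structure. In optics, a lens produces the Fourier transform of a light field in its focal plane, so a mask placed there can act as such a filter; here the transform is simply computed digitally to show the operation. The function names and the `cutoff` parameter are illustrative, and a real application would use an FFT library rather than this O(n²) loop.

```python
import cmath
from typing import List, Sequence


def dft(x: Sequence[complex]) -> List[complex]:
    """Naive discrete Fourier transform: split a signal into frequency components."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def idft(spectrum: Sequence[complex]) -> List[complex]:
    """Inverse DFT: recombine frequency components into a signal."""
    n = len(spectrum)
    return [
        sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
        for t in range(n)
    ]


def low_pass(signal: Sequence[float], cutoff: int) -> List[float]:
    """Keep only components whose frequency index is at most `cutoff`."""
    n = len(signal)
    spectrum = dft(signal)
    for k in range(n):
        # Indices above n/2 represent negative frequencies, so measure the
        # distance from the zero-frequency (DC) component in both directions.
        if min(k, n - k) > cutoff:
            spectrum[k] = 0
    return [value.real for value in idft(spectrum)]


def high_pass(signal: Sequence[float], cutoff: int) -> List[float]:
    """Whatever the low-pass filter removes is the high-frequency part."""
    return [s - low for s, low in zip(signal, low_pass(signal, cutoff))]


if __name__ == "__main__":
    # A flat background (1.0) plus the fastest possible oscillation (+1, -1, ...).
    signal = [2.0, 0.0] * 4
    print([round(v, 6) for v in low_pass(signal, cutoff=1)])   # the background
    print([round(v, 6) for v in high_pass(signal, cutoff=1)])  # the oscillation
```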

They also include complex vector-matrix multiplications, which are run in parallel on the optical chip. This is also visually very interesting: a fibre bundle carrying light from a light-emitting diode leads into a photonic chip structure that sits directly above the digital chip. This implementation alone is a masterpiece, as electronics and optics have difficulty communicating with each other. The electronics are generally somewhat slower than the optics, so the rate of signal transfer between the electronic and optical domains is a major technological bottleneck. Current studies are also addressing this, for example with optimised modulation and detection techniques.

Photo: Sven Döring / Leibniz IPHT

Your research could make it possible to dispense with thousands of electronic components in an extensive electronic infrastructure. Does this reduction also harbour risks, for example in terms of fragility?

Dr Chemnitz: That's a very good question. I would argue the other way round: as far as electronics are concerned, we have reached the end of scalability. Current manufacturing processes are at a structure size of around five nanometres. Soon, one of the leading manufacturers will certainly reach two nanometres and, to be honest, we no longer know how that will work physically. Trillions of tiny transistors are now placed on a typical wafer, the round silicon disc on which chips are made. This shrinking of electronic components increases the risk of errors, so much so that there is already a parallel industry devoted solely to sorting out faulty chips.

There is therefore great hope in the development of analogue hardware, i.e. computing systems that do not calculate digitally with zeros and ones but in a continuous range of values. Unfortunately, all analogue computing systems have accuracy problems. In optics, we face the challenge of fitting a million optical transistors into a given area, because light and electrons have different wavelengths. In other words, the particles that each technology uses to process information differ fundamentally in wavelength. The hope is that we will manage to achieve the necessary waveguide width and exploit the inherent advantages of photons, i.e. light particles. This means bringing an arbitrarily large number of light particles together in one place at the same time, which is not possible with electrons. The so-called ‘parallelism of light’ would thus be used effectively, including to compensate for the structural disadvantages of the waveguide.

Then we face the second challenge: accuracy. If the measurement electronics are noisy, even minimal deviations can influence the intelligent decision-making process that is based on these values. In stock-market and financial applications, for example, it is particularly important that the technology does not produce measurement errors. But we have to learn to deal with this. After all, our brain also operates with very noisy neuronal signals.
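The accuracy problem of analogue readout can be illustrated with a toy simulation. The sketch below, assuming additive Gaussian detector noise with a hypothetical standard deviation, computes a vector-matrix product exactly (the digital reference) and then reads it out with noise, counting how often the final decision, here the index of the largest output, changes. All names and numbers are illustrative assumptions; the point is only that when two outputs are close, small measurement deviations can flip a decision, whereas a clear margin tolerates the same noise.

```python
import random
from typing import List, Sequence


def matvec(matrix: Sequence[Sequence[float]], vector: Sequence[float]) -> List[float]:
    """Exact (digital) vector-matrix product used as the reference."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def noisy_readout(values: Sequence[float], noise_std: float, rng: random.Random) -> List[float]:
    """Hypothetical analogue readout: each detector adds Gaussian noise."""
    return [v + rng.gauss(0.0, noise_std) for v in values]


def argmax(values: Sequence[float]) -> int:
    """Index of the largest output, used as the 'decision'."""
    return max(range(len(values)), key=lambda i: values[i])


def decision_error_rate(
    matrix: Sequence[Sequence[float]],
    vector: Sequence[float],
    noise_std: float,
    trials: int = 2000,
    seed: int = 0,
) -> float:
    """Fraction of noisy trials whose decision differs from the exact result."""
    rng = random.Random(seed)
    exact = matvec(matrix, vector)
    target = argmax(exact)
    errors = sum(
        argmax(noisy_readout(exact, noise_std, rng)) != target for _ in range(trials)
    )
    return errors / trials


if __name__ == "__main__":
    weights = [[1.0, 0.0], [0.0, 1.0]]
    close_call = [1.00, 0.98]  # two nearly equal scores
    clear_call = [1.00, 0.20]  # a comfortable margin
    for std in (0.0, 0.01, 0.1):
        print(std,
              decision_error_rate(weights, close_call, std),
              decision_error_rate(weights, clear_call, std))
```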

Beyond these challenges, the prospect is more environmentally friendly AI and improved processes, for example new methods of computerless diagnostics, more intelligent microscopy or digitalised processes in the construction industry. What steps are now important to ensure that this does not remain a dream of the future?

Dr Chemnitz: Over the last thirty years, we have discovered that our current optical technologies can perform the extensive calculations required for AI. We are now inventing one system after another that optimises the calculation of a wide variety of simple test tasks. These projects are very exciting, but the question in the end is: can the energy efficiency be maintained in a real application?

Once the system is complete, there is a whole periphery that needs to be supplied with energy: the camera system, a light source and many detectors. Will it help me to implement this new structure? Will I actually achieve an effective energy gain? So far, we have only been talking about the energy gain offered by a single computing step. If we calculated with the energy drawn from the socket, the overall picture would be more realistic. However, we are confident that we can solve this problem, as light sources are also becoming increasingly compact and efficient. We can also cover more and more neural layers in parallel, and the more layers we cover, the more energy-efficient the system becomes. It is now important that we manage to demonstrate real applications with real data complexity.
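The difference between per-step and wall-plug efficiency can be expressed as a simple energy budget. In the sketch below, which uses entirely hypothetical numbers, each optical layer is very cheap to compute, but the light source and detectors draw a fixed amount of energy per inference. The effective gain is the digital baseline energy divided by the total system energy. The assumption that the periphery is paid once while layers computed in parallel only add their small computing cost is ours, chosen to illustrate why covering more layers could improve overall efficiency; none of the values are measurements.

```python
from dataclasses import dataclass


@dataclass
class OpticalSystemBudget:
    """Hypothetical per-inference energy budget; all values in joules."""

    compute_per_layer: float  # passive optical computation per layer (tiny)
    light_source: float       # laser or LED, drawn from the socket
    detectors: float          # photodetectors plus readout electronics
    camera: float = 0.0       # optional input periphery

    def total_energy(self, layers: int) -> float:
        # Assumption: the periphery is paid once per inference, while each
        # layer computed in the same light field adds only its small cost.
        periphery = self.light_source + self.detectors + self.camera
        return layers * self.compute_per_layer + periphery


def effective_gain(baseline_per_layer: float, system: OpticalSystemBudget, layers: int) -> float:
    """Digital baseline energy divided by the optical system's wall-plug energy."""
    return (layers * baseline_per_layer) / system.total_energy(layers)


if __name__ == "__main__":
    # Illustrative numbers only: a 1,000x cheaper computing step per layer,
    # but a periphery that costs as much as two digital layers.
    system = OpticalSystemBudget(compute_per_layer=1e-12, light_source=1e-9, detectors=1e-9)
    print(f"per-step gain: {1e-9 / system.compute_per_layer:.0f}")
    for layers in (1, 10, 100):
        print(layers, round(effective_gain(1e-9, system, layers), 1))
```

With these made-up numbers the per-step gain is 1,000, yet the wall-plug gain is only about 0.5 for a single layer (worse than the baseline) and rises to roughly 48 with 100 layers, because the fixed periphery is shared across more computation.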

Are there already enquiries from industry for your research?

Dr Chemnitz: Our work is currently a novelty in the field. We have opened up a more abstract way of thinking about information processing. This is why industry is still hesitant to make enquiries. But the implementations that already have several years of research behind them, such as the AI accelerators I mentioned, are definitely in demand from industry. Microsoft, for example, is currently building its own optical accelerator chip. There are start-ups such as Lightmatter from the USA, which is developing a hybrid chip made of glass that is placed on the electronic chip. In Germany, there is the team at Akhetonics, which wants to realise a complete digital chip architecture fully optically. This means that everything the current processor in a computer does would in future be done with light. The hope is that these systems can also be used as general-purpose processors for AI. Numerous young companies are trying to transfer research into industry, and that's exactly what we intend to do. However, we still need some time to bring our development to the point where we can actually position ourselves competitively.

How much further potential does analysing the neural networks of the human brain hold for optimising AI? Where are the limits?

Dr Chemnitz: How high the potential of AI is estimated to be depends on who you ask. For many, a goal would certainly be to replace a highly trained human specialist with an AI that also checks its own work while solving the task. That would already be a very big step, but it would not yet mean superintelligence. For that, the machine would have to carry out processes that humans are not capable of themselves. In my opinion, much of this is still fantasy.

Do you see it as a future option for AI to work in a team with humans in order to improve itself, including in terms of energy efficiency?

Dr Chemnitz: We could certainly achieve this in the next ten years by means of general artificial intelligence. Sharing information with AI to optimise workflows and processes would be a huge boost for our general work. Capacities could be multiplied. If we had such systems, AI would be a kind of virtual colleague. But even virtual colleagues make mistakes; we must not forget that. And the question remains to what extent we can trust this virtual support. The systems currently being developed by large companies around the world can no longer be openly tested, and the question of data security would have to be answered reliably in advance.